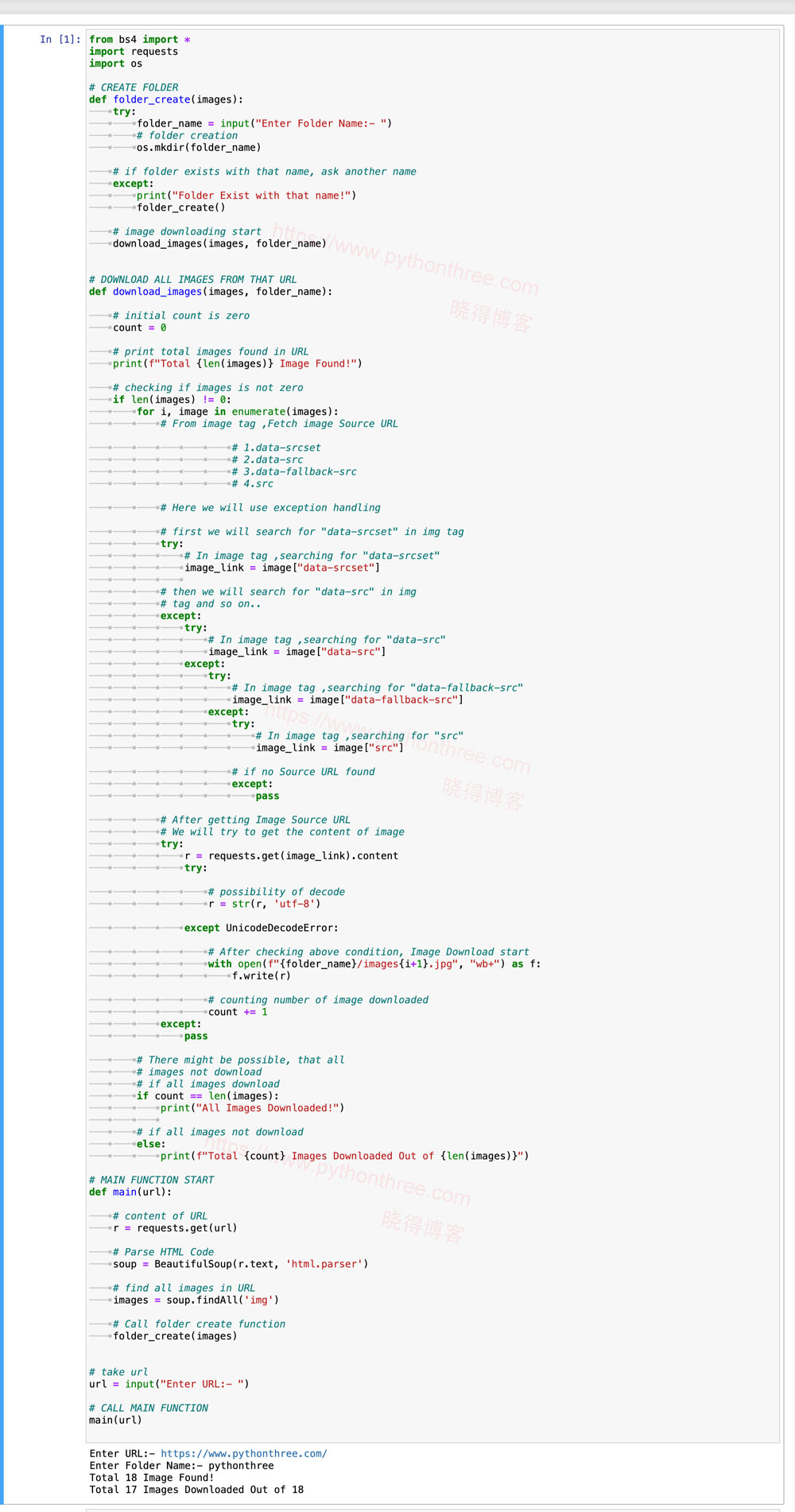

How to Download Images from a Web Page with Python

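Web scraping is a technique for retrieving data from websites. Many websites do not let users save their data for personal use. One workaround is to copy and paste the data by hand, which is tedious and time-consuming. Web scraping automates this process of extracting data from a website. In this article, we discuss how to download the images on a web page with Python.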

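Required Modules

- bs4: Beautiful Soup (bs4) is a Python library for extracting data from HTML and XML files. It is not part of the Python standard library (install it with `pip install beautifulsoup4`).

- requests: Requests makes it extremely easy to send HTTP/1.1 requests. It is not built into Python either (install it with `pip install requests`).

- os: Python's `os` module provides functions for interacting with the operating system. It belongs to Python's standard utility modules and offers a portable way to use operating-system-dependent functionality.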
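Because `bs4` and `requests` are third-party packages, a script can check up front whether they are importable before it starts scraping. The sketch below relies only on the standard library's `importlib.util.find_spec`; the helper name `missing_modules` and the `REQUIRED` mapping are hypothetical, and the mapping uses the usual PyPI install names (`beautifulsoup4` for the `bs4` import).

```python
import importlib.util

# Import name -> name to pass to `pip install` (None: same as the import name).
# Hypothetical mapping for this article's three modules.
REQUIRED = {"bs4": "beautifulsoup4", "requests": "requests", "os": None}


def missing_modules(required):
    """Return the pip package names for modules that cannot be imported."""
    missing = []
    for module_name, pip_name in required.items():
        # find_spec returns None when a top-level module is not installed
        if importlib.util.find_spec(module_name) is None:
            missing.append(pip_name or module_name)
    return missing


if __name__ == "__main__":
    to_install = missing_modules(REQUIRED)
    if to_install:
        print("Install first: pip install " + " ".join(to_install))
    else:
        print("All required modules are available.")
```

`os` always resolves because it ships with Python, so only the two third-party names can ever appear in the install hint.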

Approach

- Import the modules.

- Get the HTML code.
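The first two steps, importing the modules and getting the HTML code, could look roughly like this. It is a sketch, not the article's own code: the helper name `get_soup`, the optional `session` parameter (added so the function can be exercised without a network connection), and the `User-Agent` value are assumptions. Some sites reject requests that carry the default `requests` User-Agent, but a custom header is not guaranteed to help.

```python
import requests
from bs4 import BeautifulSoup

# Assumption: a browser-like User-Agent; the exact value is illustrative only.
HEADERS = {"User-Agent": "Mozilla/5.0 (image-downloader sketch)"}


def get_soup(url, session=None, timeout=10):
    """Download the page at `url` and return it parsed by Beautiful Soup."""
    session = session or requests.Session()
    # A timeout keeps the script from hanging on an unresponsive server
    response = session.get(url, headers=HEADERS, timeout=timeout)
    # Stop early on 4xx/5xx responses instead of parsing an error page
    response.raise_for_status()
    return BeautifulSoup(response.text, "html.parser")
```

For example, `soup = get_soup("https://example.com/")` returns a `BeautifulSoup` object, and `soup.find_all("img")` then lists the page's image tags.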

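Get the list of `img` tags from the HTML code with Beautiful Soup's `findAll` method (current bs4 versions name it `find_all`; `findAll` is the older alias).

```python
images = soup.find_all('img')
```

Create a separate folder for the downloaded images with the `mkdir` method from `os`.

```python
os.mkdir(folder_name)
```

Loop over all the images and get each image's source URL. Once you have the source URL, the last step is to download the image. First, get the image content:

```python
r = requests.get(source_url).content
```

Then save the image using file handling:

```python
# Enter a file name with an extension such as .jpg or .png
with open("file_name.jpg", "wb") as f:
    f.write(r)
```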
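The remaining steps (collect the `img` tags, resolve each source URL, create a folder, write the bytes) can be sketched as two small helpers. The names `image_urls` and `save_bytes` are hypothetical, and this sketch reads only the `src` attribute. It uses `os.makedirs(..., exist_ok=True)` instead of `os.mkdir` so that an existing folder is reused rather than raising an error, and `urljoin` to turn relative `src` values into the absolute URLs that `requests` needs.

```python
import os
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def image_urls(soup, page_url):
    """Return absolute URLs for every <img> tag that has a src attribute."""
    urls = []
    for img in soup.find_all("img"):
        src = img.get("src")
        if src:
            # Turn relative paths like "/img/logo.png" into full URLs
            urls.append(urljoin(page_url, src))
    return urls


def save_bytes(data, folder, filename):
    """Write raw image bytes to folder/filename, creating the folder if needed."""
    os.makedirs(folder, exist_ok=True)
    path = os.path.join(folder, filename)
    # "wb" = write in binary mode, because image content is bytes, not text
    with open(path, "wb") as f:
        f.write(data)
    return path


html = '<img src="/img/logo.png"><img alt="no source"><img src="https://cdn.example.com/b.jpg">'
soup = BeautifulSoup(html, "html.parser")
print(image_urls(soup, "https://example.com/blog/post.html"))
# ['https://example.com/img/logo.png', 'https://cdn.example.com/b.jpg']
```

To actually download, the helpers can be combined with `requests`, for example `save_bytes(requests.get(url, timeout=10).content, "images", f"image{n}.jpg")` for the n-th URL.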

Downloading Web Page Images with Python

```python
import os
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup


# CREATE FOLDER
def folder_create(images, page_url):
    # Keep asking until a folder with an unused name has been created
    while True:
        folder_name = input("Enter Folder Name:- ")
        try:
            # folder creation
            os.mkdir(folder_name)
            break
        except FileExistsError:
            # if folder exists with that name, ask another name
            print("Folder Exist with that name!")

    # image downloading start
    download_images(images, folder_name, page_url)


def get_image_link(image):
    # From the img tag, fetch the image source URL, checking in order:
    # 1.data-srcset  2.data-src  3.data-fallback-src  4.src
    for attr in ("data-srcset", "data-src", "data-fallback-src", "src"):
        value = (image.get(attr) or "").strip()
        if value:
            if attr == "data-srcset":
                # a srcset looks like "a.jpg 1x, b.jpg 2x": keep the first URL
                first = value.split(",")[0].split()
                if not first:
                    continue
                value = first[0]
            return value
    # no source URL found
    return None


# DOWNLOAD ALL IMAGES FROM THAT URL
def download_images(images, folder_name, page_url):
    # initial count is zero
    count = 0

    # print total images found in URL
    print(f"Total {len(images)} Image Found!")

    # nothing to do if the page has no images
    if len(images) == 0:
        return

    for i, image in enumerate(images):
        image_link = get_image_link(image)
        if image_link is None:
            continue
        # resolve relative links such as "/img/a.png" against the page URL
        image_link = urljoin(page_url, image_link)

        # After getting the image source URL, try to get the image content
        try:
            r = requests.get(image_link, timeout=10).content
        except requests.RequestException:
            continue

        try:
            # If the bytes decode as UTF-8 text, the response is most likely
            # an HTML page rather than a binary image, so skip it
            r.decode("utf-8")
            continue
        except UnicodeDecodeError:
            pass

        # Image download start
        with open(os.path.join(folder_name, f"images{i+1}.jpg"), "wb") as f:
            f.write(r)
        # counting number of images downloaded
        count += 1

    # Some images may fail to download
    if count == len(images):
        print("All Images Downloaded!")
    else:
        print(f"Total {count} Images Downloaded Out of {len(images)}")


# MAIN FUNCTION START
def main(url):
    # content of URL
    r = requests.get(url, timeout=10)
    r.raise_for_status()
    # Parse HTML code
    soup = BeautifulSoup(r.text, "html.parser")
    # find all images in URL
    images = soup.find_all("img")
    # Call folder create function
    folder_create(images, url)


if __name__ == "__main__":
    # take url
    url = input("Enter URL:- ")
    # CALL MAIN FUNCTION
    main(url)
```
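The script above decides whether a response is an image by trying to decode it as UTF-8, and it saves every file with a `.jpg` extension. That heuristic would also skip SVG images, because SVG is text. A possible alternative, sketched below under the assumption that the server sends an accurate `Content-Type` header, is to check that header and pick the extension from it with the standard `mimetypes` module. The function names `image_extension` and `download_image` and the `.img` fallback extension are hypothetical.

```python
import mimetypes
import os

import requests


def image_extension(content_type):
    """Map a Content-Type header to a file extension, or None if not an image."""
    # Drop parameters such as "; charset=binary" and normalise the case
    mime = (content_type or "").split(";")[0].strip().lower()
    if not mime.startswith("image/"):
        return None
    # Hypothetical fallback for image types mimetypes does not know
    return mimetypes.guess_extension(mime) or ".img"


def download_image(url, folder, stem, timeout=10):
    """Save `url` as folder/stem<ext> if the server reports an image type.

    Returns the saved path, or None when the response is not an image.
    """
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()
    ext = image_extension(response.headers.get("Content-Type"))
    if ext is None:
        return None
    path = os.path.join(folder, stem + ext)
    with open(path, "wb") as f:
        f.write(response.content)
    return path
```

The trade-off is trust: the header check depends on the server labelling its responses correctly, while the decode check inspects the bytes themselves.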